Wake Word Detection Demo

Background

In a previous post, I described how I set up a wake word detection model that takes in audio input and determines whether or not the sequence contains the phrase "Hey Jarvis".

In this post, I'll describe how I set up the demo shared below so that it periodically records audio in the user's browser and sends it to the server, where the same model checks it for the wake word.

Demo

Website Setup

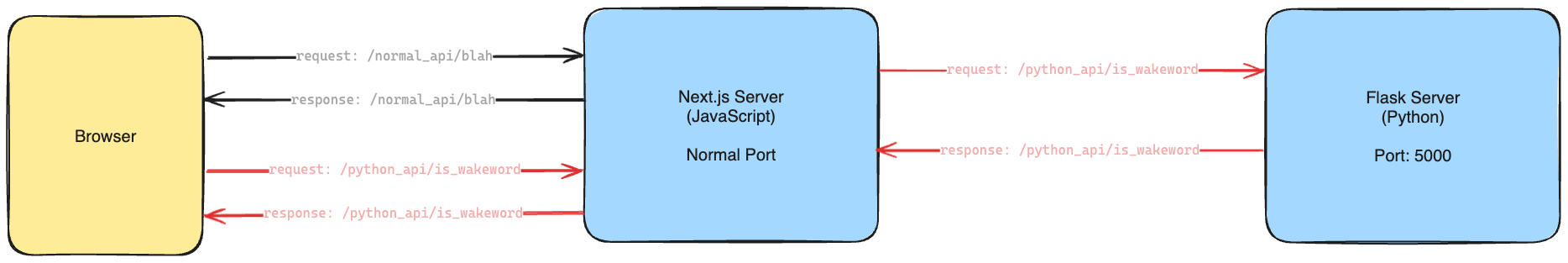

The first roadblock I ran into when setting up this demo was that this website is written in Next.js, while my wake word detection model is written in Python. So I needed some way to pass data from JavaScript to code running in Python.

There are many ways to accomplish this, but the easiest one I found was to set up a Flask server for my Python code. When I ran my project, I had two server instances running on different ports: one for Next.js (my JavaScript backend) and one for Flask (my Python backend).

I then modified my next.config.js to use rewrites to route incoming requests for certain APIs to the Flask server if needed. This is what the code in the next.config.js file looked like:

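    // next.config.js
    const nextConfig = {
      async rewrites() {
        return [
          {
            // Forward /python_api/<name> to the Flask server on port 5000
            source: '/python_api/:path',
            destination: 'http://127.0.0.1:5000/:path',
          },
        ]
      },
    }

    module.exports = nextConfig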

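This causes any request whose path is /python_api/ followed by a single path segment to be routed to the Flask server. For example, a request to /python_api/is_wakeword is forwarded to http://127.0.0.1:5000/is_wakeword. (A `:path` parameter matches only one segment; nested paths would need `:path*`.) Here is the process visualized: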

Passing Data from Client to Server

Now that I had the server set up, I could focus on sending audio data from the client to the server. To do this, I used the MediaDevices and MediaRecorder APIs to record voice data in the browser. This link contains a comprehensive example that made up the bulk of my code. In the MediaRecorder's onstop handler, I would upload the audio data as FormData in a POST request using the fetch API, similar to uploading a file to the server.
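To make the recording step concrete, here is a minimal sketch of how a MediaRecorder can be wrapped so that every recording arrives as a single Blob. This is not my exact code: `createClipRecorder` and `onClip` are hypothetical names, the `'audio/webm'` fallback type is an assumption, and the third parameter exists only so the helper can be exercised outside a browser.

```javascript
// Sketch: wrap a MediaRecorder so each recording is delivered as one Blob.
// `createClipRecorder` and `onClip` are hypothetical names for illustration.
function createClipRecorder(stream, onClip, RecorderCtor = globalThis.MediaRecorder) {
  const recorder = new RecorderCtor(stream);
  let chunks = [];

  // The browser hands over recorded data in one or more chunks.
  recorder.ondataavailable = (event) => {
    if (event.data && event.data.size > 0) chunks.push(event.data);
  };

  // When recording stops, merge the chunks into a single Blob and reset.
  recorder.onstop = () => {
    // 'audio/webm' is an assumed fallback; browsers differ in what they record.
    const clip = new Blob(chunks, { type: recorder.mimeType || 'audio/webm' });
    chunks = [];
    onClip(clip);
  };

  return recorder;
}

// Browser usage (requires microphone permission):
// const stream = await navigator.mediaDevices.getUserMedia({ audio: true });
// const recorder = createClipRecorder(stream, (clip) => console.log(clip.size));
// recorder.start();
```

Keeping the chunk handling inside the helper means the caller only ever sees complete clips, which is the unit the server expects.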

The fetch call sends the request to /python_api/is_wakeword. Since the rewrite from the first part of this post forwards requests under /python_api/ to the Flask server, each request, with the audio attached as a file, ends up at the Python code running in Flask.
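The upload itself can be sketched roughly as follows. Only the /python_api/is_wakeword path comes from this post; the form field name `audio`, the file name `clip.webm`, the helper names, and the assumption that the server replies with JSON are all illustrative and may not match the real demo.

```javascript
// Endpoint path from the post; everything else here is an assumption.
const WAKEWORD_URL = '/python_api/is_wakeword';

// Build the multipart POST request for one recorded clip.
function buildUploadRequest(clip) {
  const form = new FormData();
  // 'audio' is a hypothetical field name; the server must read the same key.
  form.append('audio', clip, 'clip.webm');
  // No Content-Type header is set by hand: fetch derives the multipart
  // boundary from the FormData body.
  return { url: WAKEWORD_URL, options: { method: 'POST', body: form } };
}

// Send the clip and return the parsed reply (assumed to be JSON).
async function checkWakeWord(clip, fetchImpl = globalThis.fetch) {
  const { url, options } = buildUploadRequest(clip);
  const response = await fetchImpl(url, options);
  if (!response.ok) {
    throw new Error(`Upload failed with status ${response.status}`);
  }
  return response.json();
}
```

In the browser this would be called from the recorder's onstop handler, for example `checkWakeWord(clip).then((result) => console.log(result))`.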

The Python code in the Flask server receives the audio data as a file, which can be saved to a temporary folder using Flask's API for handling file uploads. From there, the code uses torchaudio to load the file as a waveform tensor and feeds it into the PyTorch model to evaluate whether or not the file contains the wake word.

The result of the model evaluation is then returned from the API and passed down to the client in order to show whether or not the audio segment just recorded contained the wake word!

The final thing I needed to do was send this information to the server periodically. To do this, I used setInterval with a delay of 2 seconds to call .stop() and .start() on the MediaRecorder, so that the onstop handler fired every 2 seconds. As a result, audio data is uploaded to the server every 2 seconds.
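A minimal sketch of that loop is shown below. `startPeriodicClips` is a hypothetical helper, and the `timers` parameter is only there so the timing can be faked in a test. It assumes the recorder's onstop handler already performs the upload, and that restarting immediately after stop() behaves well in the target browser, which is worth verifying.

```javascript
// Sketch: cut the recording into fixed-length clips by stopping and
// restarting the recorder on a timer. `startPeriodicClips` is hypothetical.
function startPeriodicClips(recorder, clipMs = 2000, timers = globalThis) {
  recorder.start();

  const id = timers.setInterval(() => {
    // stop() triggers onstop, which is where the upload happens...
    if (recorder.state === 'recording') recorder.stop();
    // ...and start() immediately begins the next clip.
    recorder.start();
  }, clipMs);

  // Return a function that ends the loop and flushes the last clip.
  return () => {
    timers.clearInterval(id);
    if (recorder.state === 'recording') recorder.stop();
  };
}
```

Calling the returned function (for example when the demo component unmounts) clears the interval so the browser does not keep recording in the background.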